Vibe Coding for Silicon: Dream or Destination?

What happens when AI meets RTL — and whether we’re ready for it.

The phrase “vibe coding” took off when Andrej Karpathy coined it to describe the emerging way developers work with large language models (LLMs): describe the intent in plain English, get back boilerplate Python, iterate, tweak, and ship. The loop is fast, and the friction is low. You don’t need to hold every syntax rule in your head. You just describe what you want, and the model responds.

In the pure software world — JavaScript, Python, Swift — this mindset is spreading rapidly. ChatGPT can now scaffold a React app, build a Flask server, or generate an Airtable-compatible API, and developers are increasingly acting more like editors than authors. In this new workflow, developers often don’t even read every line. As long as the output compiles, passes unit tests, and does what it should, that’s good enough.

The core idea: You vibe with the machine, not command it.

Can That Paradigm Cross Into Silicon?

But can this same intent-over-syntax mindset work in chip design?

RTL is fundamentally different. Hardware description languages like SystemVerilog, VHDL, Chisel, and SpinalHDL represent not procedures, but structure — finite state machines, deeply pipelined datapaths, concurrency, event-driven semantics. Bugs here can mean silicon re-spins, costing millions and delaying products by quarters.

Unlike pure software code, hardware designs don’t get “deployed and patched.” Once etched into silicon, mistakes are permanent. That’s why the traditional RTL flow is cautious, layered, and manually verified.

Yet the desire is real. A generation of engineers has grown up watching LLMs write working Python and ask: “Why not Verilog?”

The answer, until recently, was: “It’s just too risky.” But now the risk equation is starting to shift.

What an RTL Vibe Loop Would Require

A true “vibe coding” experience for RTL would require a full-loop co-design system:

Prompt → RTL → Sim → Correction, integrated and continuous

Models that can output RTL and the corresponding testbench, assertions, and even formal properties

Seamless feedback from waveform viewers, synthesis tools, and verification engines into the prompt cycle

Imagine this: an engineer prompts an AI, “Design a FIFO with two clock domains, built-in overflow detection, and reset logic.” The LLM outputs parameterized SystemVerilog along with assertions and a UVM-style testbench. It runs in simulation and passes checks. If not, the AI refines the code based on simulation output.

Academic prototypes are already proving this is feasible. In May, researchers released Spec2RTL-Agent, a GPT-4 based framework that takes textual specifications and generates Verilog with automated testbenches and coverage goals. Meanwhile, RTL++ uses iterative refinement between LLMs and simulation tools to improve accuracy across design targets.

Verification and synthesis — once reserved for the “back-end” — now must be embedded in the front-loop to close the vibe cycle.

Tool Flows, Teams, and Trust

Even if the tech works, there’s another hurdle: trust.

RTL engineers have a high bar for accepting code they didn’t write. Unlike in web dev, where debugging a broken form takes minutes, debugging metastability or timing failure can take weeks. “If I didn’t write it, how can I trust it?” is not paranoia — it’s a survival instinct.

Domain-specific languages like Amaranth HDL, PyMTL, and nMigen are increasingly seen as bridges. They allow more abstract design entry, mix Python and RTL logic, and are arguably more compatible with LLM prompts. But they still fall short of full “vibe” loops.

The real leap will come when toolchains don’t just assist RTL entry — they become co-design partners.

Workflow Comparison: Old vs. New

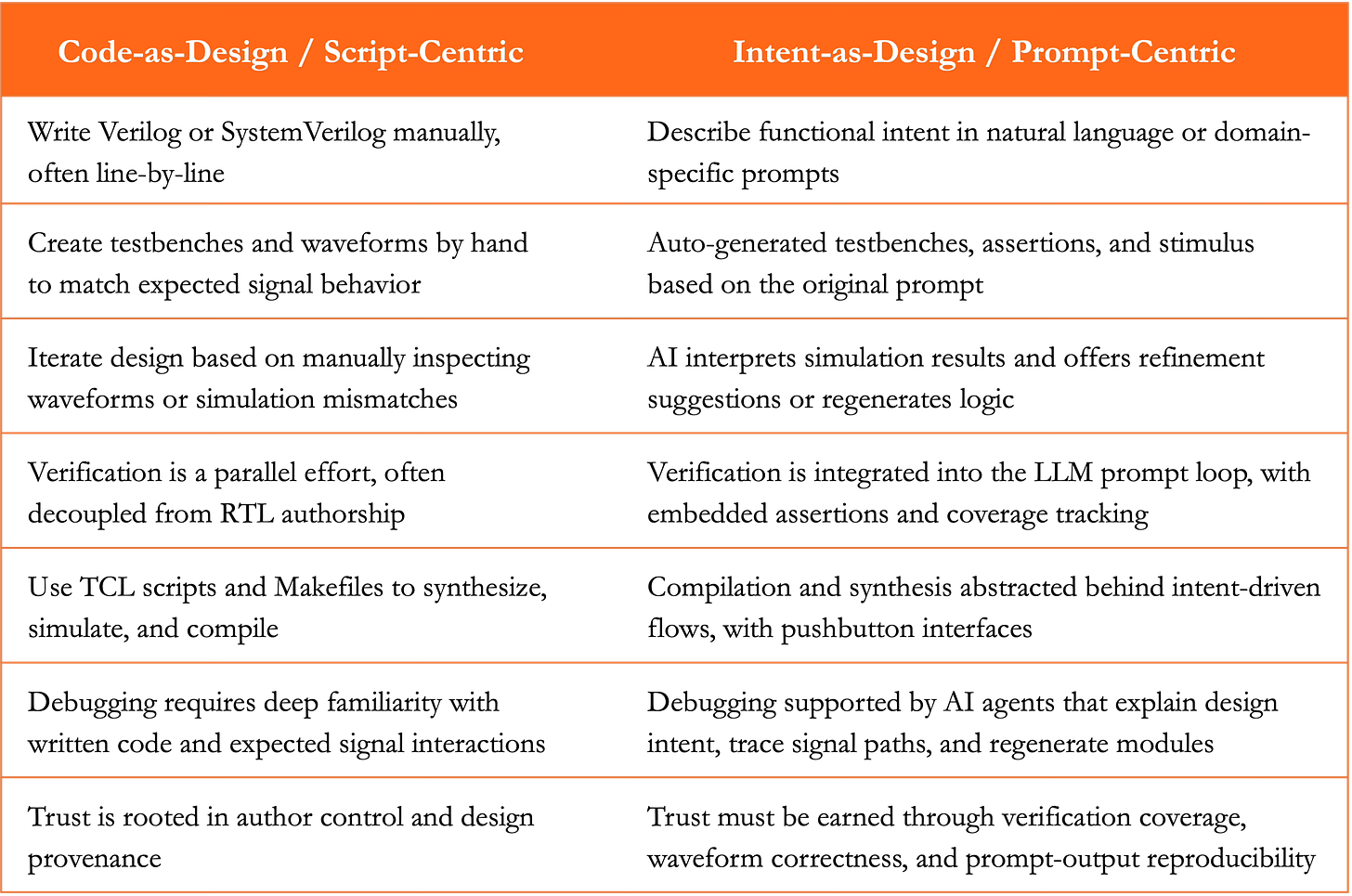

While vibe coding in software is often described as a UX shift — from keyboard to co-pilot — in RTL, the shift is more foundational. It questions how engineers express design intent, how tools translate that intent into hardware structures, and how correctness is validated. Traditional RTL workflows are linear, toolchain-centric, and manually intensive. Design intent is buried in lines of code, often shaped by the quirks of synthesis tools or legacy IP integration.

But what if the entry point into design could change?

Prompt-native RTL flips the flow. It starts not with HDL code, but with a design goal: “Build me a dual-port memory with ECC, clock gating, and AMBA AXI compatibility.” It doesn’t stop at generating code. The model suggests testbenches, timing constraints, formal checks, and even synthesis scripts — or at least scaffolds for all of them. Each component is explainable, debuggable, and most importantly, revisable via a new prompt.

Here’s a side-by-side comparison of the two paradigms:

This is more than a UX shift. It redefines the entry point, the role of the engineer, and the composition of the design team. RTL engineers shift from low-level coders to intent architects, verification teams move from simulation scripting to coverage supervision, and toolchains begin to anticipate rather than merely respond to human decisions.

Importantly, this transformation does not eliminate traditional RTL skills — it reframes them. The old workflows will persist for ultra-critical designs, constrained legacy IP, or designs at the edge of architectural innovation. But increasingly, the mainstream path — especially for AI accelerators, edge inference blocks, or subsystem IP — will flow through intent-driven pipelines.

Trust and Friction: What RTL Engineers Fear Most

The most profound shifts in engineering come not from better tools, but from redefined roles. As AI enters the RTL design loop, the transition is not from manual to automatic, but from editor to agent. That transition is as much psychological as it is technological.

Silicon designers are not just code authors. They are risk managers, root-cause debuggers, and system architects. In this world, trust is earned through visibility. When an AI suggests logic, it must also articulate why, show how it will be verified, and provide a path for the engineer to intervene. Without that transparency, the promise of automation will stall at the threshold of production sign-off.

This is where the next-generation design environments must lead: toward explainable agentic systems that keep the human firmly in the loop. These systems must not only synthesize RTL, but also reason about assertions, surface corner cases, and integrate simulation feedback as part of the creative loop. They must reduce iteration friction without diluting accountability.

Put simply: the shift is not to “AI that codes,” but to “AI that collaborates.” And for that collaboration to scale, trust must be built into the very architecture of the design process — not bolted on afterward.

Strategic Tension

The promise of vibe coding for RTL isn’t just an interface upgrade — it threatens to flip the economics of chip design in favor of hardware designers and against a status quo built on iteration and friction.

Keep reading with a 7-day free trial

Subscribe to Insights from the Inside to keep reading this post and get 7 days of free access to the full post archives.